About Demo Project for Apache Camel - Hippo Event Bus Support

Problems Overview

The Apache Camel - Hippo Event Bus Support component addresses architectures in which an external system must be updated whenever a document event (such as publication or depublication) occurs in Hippo CMS.

In practice, such external systems include:

- Enterprise search engines (already in place for search needs, integrating content from various systems)

- Content caching services (which rely on other systems to send proper cache invalidation calls)

- and more

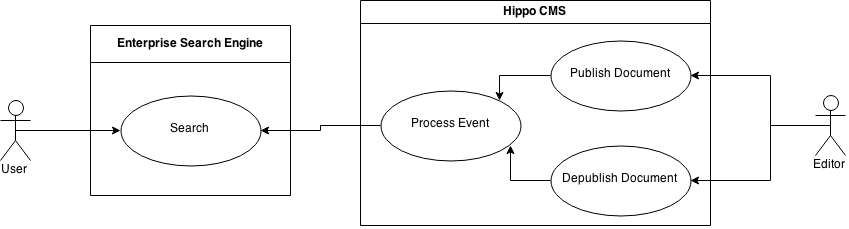

The most common scenario is integration with an existing enterprise search engine. Bloomreach CMS must update the search index, mostly in response to document publication and depublication events, as shown in the diagram above.

If you implement your own event listener registered with the Event Bus, the design looks almost the same as the diagram above: the listener invokes the external search engine directly (e.g., through a REST service or a direct API call).
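The direct approach might look like the minimal Java sketch below. It uses hypothetical names (`SearchClient`, `DirectCallListener`, `onPublished`) and does not use the real Hippo Event Bus listener API, so it runs on a plain JDK. It shows two weaknesses. First, the thread that delivers the event waits for the external call. Second, when that call fails, no stored copy of the event remains to retry.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical client for the external search engine (not a Hippo or Camel API).
interface SearchClient {
    void index(String handleId) throws Exception;
}

// Sketch of the "direct call" approach: the listener calls the external
// system synchronously on the thread that delivers the event.
final class DirectCallListener {
    private final SearchClient client;
    // Recorded only to make the loss visible; a real listener would typically just log it.
    private final List<String> lostEvents = new ArrayList<>();

    DirectCallListener(SearchClient client) {
        this.client = client;
    }

    // Hypothetical callback; a real listener would be registered with the Hippo Event Bus.
    void onPublished(String handleId) {
        try {
            // Blocks the event-delivery thread until the search engine responds.
            client.index(handleId);
        } catch (Exception e) {
            // Nothing stored the event beforehand, so there is nothing left to retry.
            lostEvents.add(handleId);
        }
    }

    List<String> lostEvents() {
        return lostEvents;
    }
}
```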

However, this approach raises the following architectural questions:

- If the external system becomes temporarily unavailable, event messages may be lost. How can we guarantee that every event is processed? Reliability matters.

- If calls to the external search engine take longer than expected, they may degrade the performance (e.g., response time) of the CMS front-end application and the user experience. How can we avoid this? Performance matters.

- We would rather focus on our business logic than spend time learning, implementing, and maintaining Event Bus listeners in Java ourselves. We may also want to change the event processing steps much faster in the future (e.g., adding an e-mail notification step in less than two hours). Modifiability matters.

- We would like to monitor event processing and manage it (e.g., turn it on or off). Implementing these features in our own listener code would add considerable complexity. How can we achieve this? Manageability matters.

- and more

The Apache Camel - Hippo Event Bus Support component is provided to address all of these questions.

Solutions Overview

This demo project shows working solutions for the following scenarios:

- Demo 1: Solr Search Engine Integration

- Demo 2: ElasticSearch Search Engine Integration

- Demo 3: Running with ActiveMQ

The first demo shows integration with an Apache Solr search engine.

The second demo uses the same integration approach as the first, but with the ElasticSearch engine instead of Apache Solr.

The third demo integrates with either Apache Solr or ElasticSearch, using ActiveMQ as the message queueing solution instead of file-based inbox folder polling.

The solutions are simple, thanks to Apache Camel and the hippoevent: Apache Camel component.

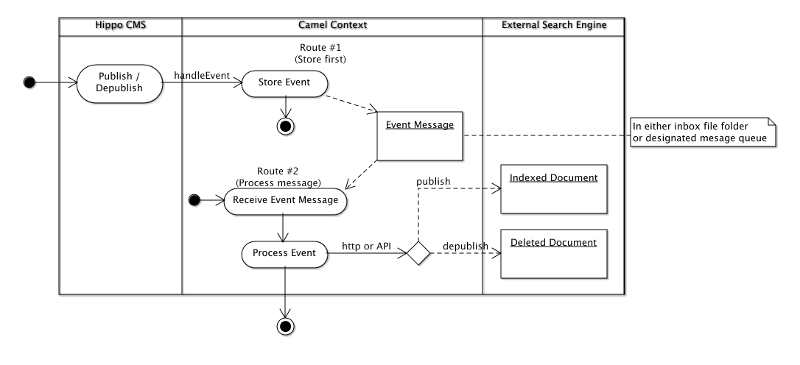

The idea in this demo project is to configure two Camel routes:

- one that stores each HippoEventBus event either as a file in a designated folder or as a message in a designated message queue.

- another that reads each stored message (from the file or the message queue) and invokes the specified REST service URL.

Depending on your use cases, you can configure more advanced routes (e.g., parallel processing or message translation), but the fundamental idea here is 'store first and forward later'.
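The following plain-Java sketch illustrates 'store first and forward later' with a file inbox. It is not how Camel implements its file: component. `StoreAndForward`, `store`, `forwardAll`, and `pending` are hypothetical names, and the `Predicate<String>` sender stands in for the HTTP call made by the second route.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.List;
import java.util.UUID;
import java.util.function.Predicate;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Plain-JDK sketch of "store first and forward later" with a file inbox.
final class StoreAndForward {
    private final Path inbox;

    StoreAndForward(Path inbox) throws IOException {
        this.inbox = Files.createDirectories(inbox);
    }

    // First route: persist the event and return right away.
    // Writing a .tmp file and then renaming it keeps the poller from reading half-written files.
    Path store(String eventJson) throws IOException {
        String name = UUID.randomUUID().toString();
        Path tmp = inbox.resolve(name + ".tmp");
        Files.writeString(tmp, eventJson, StandardCharsets.UTF_8);
        return Files.move(tmp, inbox.resolve(name + ".json"), StandardCopyOption.ATOMIC_MOVE);
    }

    // Second route: try to deliver each stored event (in no particular order).
    // A file is deleted only after successful delivery; failed files stay for the next poll.
    int forwardAll(Predicate<String> sender) throws IOException {
        int delivered = 0;
        for (Path file : pendingFiles()) {
            String json = Files.readString(file, StandardCharsets.UTF_8);
            if (sender.test(json)) {
                Files.delete(file);
                delivered++;
            }
        }
        return delivered;
    }

    // Number of events still waiting in the inbox.
    int pending() throws IOException {
        return pendingFiles().size();
    }

    private List<Path> pendingFiles() throws IOException {
        try (Stream<Path> files = Files.list(inbox)) {
            return files.filter(p -> p.getFileName().toString().endsWith(".json"))
                        .collect(Collectors.toList());
        }
    }
}
```

The two methods mirror the two routes. `store` is cheap and local, so the event source is never blocked by the external system. `forwardAll` can run on its own schedule and simply tries again on the next poll. In the demo project, the Camel routes handle these steps, so this code only shows the shape of the pattern.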

The following swimlane activity flow diagram illustrates this point:

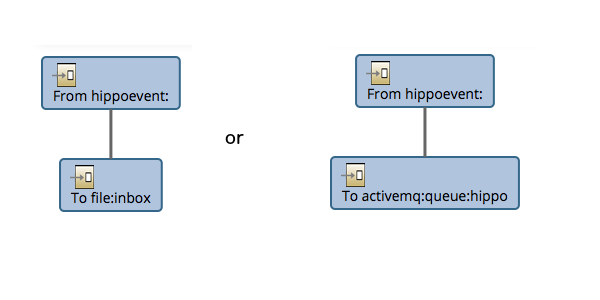

In the first route ('Route-HippoEventBus-to-File' or 'Route-HippoEventBus-to-Queue' in this demo project), the hippoevent: component receives a HippoEventBus event and converts it into a JSONObject. The next component in the route ('file:' or 'activemq:' in this demo project) then stores the JSON message in the file inbox folder or the designated message queue.

Note: In the default running mode, the first route simply stores each Hippo event as a JSON file in the 'inbox' folder. In ActiveMQ running mode, it stores each Hippo event as a JSON message in the 'hippo' message queue instead.
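The hippoevent: component performs the conversion itself. As a rough illustration only, the sketch below flattens a map of event attributes into a JSON object string. `EventJson` is a hypothetical name. The attribute names in the usage example (`action`, `category`) are assumptions, not necessarily the fields the component emits.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch of the "event to JSON" step: flat string attributes become one JSON object.
final class EventJson {
    static String toJson(Map<String, String> attributes) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        // TreeMap gives a stable key order, which makes the output easy to compare.
        for (Map.Entry<String, String> e : new TreeMap<>(attributes).entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append(quote(e.getKey())).append(':');
            // Missing values are written as JSON null.
            sb.append(e.getValue() == null ? "null" : quote(e.getValue()));
        }
        return sb.append('}').toString();
    }

    // Escapes quotes, backslashes, and control characters as JSON requires.
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }
}
```

For example, `EventJson.toJson(Map.of("action", "publish", "category", "workflow"))` returns `{"action":"publish","category":"workflow"}`. The resulting string is what would be stored as a file or a queue message.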

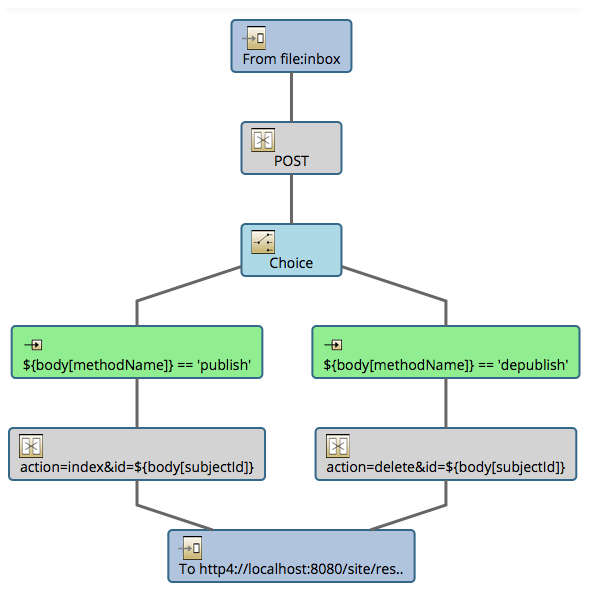

In the second route ('Route-File-to-REST' or 'REST-Queue-to-REST' in this demo project), the first component either polls a file from the inbox folder or receives a message from the designated message queue. The next component ('http:' in this demo project) then makes an HTTP request to the designated REST service URL to index or delete the document in the external search engine.

Note: In ActiveMQ running mode, the first component is replaced by 'from:activemq:hippo', which receives event messages from the message queue named 'hippo' in this demo project.
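The way the second route maps a stored message to an HTTP call depends on the REST resources in the SITE application. The sketch below assumes a hypothetical contract. To index a document, it POSTs the JSON to a base URL. To remove one, it sends DELETE to `{baseUrl}/{handleId}`. For each case it builds, without sending, a `java.net.http.HttpRequest` (Java 11+). `ForwardRequests`, `forEvent`, and the `publish`/`depublish` action values are assumptions for illustration.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical mapping from a stored event to an HTTP request; the demo's
// real REST resources define their own paths and payloads.
final class ForwardRequests {
    static HttpRequest forEvent(String baseUrl, String action, String handleId, String json) {
        switch (action) {
            case "publish":
                // Index (or re-index) the document by sending the event JSON.
                return HttpRequest.newBuilder(URI.create(baseUrl))
                        .header("Content-Type", "application/json")
                        .POST(HttpRequest.BodyPublishers.ofString(json))
                        .build();
            case "depublish":
                // Remove the document from the index; assumes handleId is URL-safe (e.g., a UUID).
                return HttpRequest.newBuilder(URI.create(baseUrl + "/" + handleId))
                        .DELETE()
                        .build();
            default:
                throw new IllegalArgumentException("Unsupported action: " + action);
        }
    }
}
```

A request built this way could be sent with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`. Treating a non-2xx status as a failure leaves the message stored for a later attempt.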

This approach satisfies the following quality attributes:

- Reliability: even if the external system becomes temporarily unavailable, no event message is lost, because failed messages remain stored in the designated folder or message queue.

- Performance: by splitting the processing into multiple routes (store first and forward later), the second route can poll or receive messages and process them asynchronously. This minimizes the impact on Hippo CMS/Repository performance.

- Modifiability: all event processing is configured and executed by the Apache Camel Context. You can apply enterprise integration patterns using the many available Apache Camel components and focus on your business logic. In this demo project, the business logic is either SolrRestUpdateResource or ElasticSearchRestUpdateResource. Each is implemented as an HST REST service in the SITE application, where it can create SITE links, and each is invoked by an 'http4' component in the second route to update the search engine index data.
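The performance point can be sketched with a JDK queue standing in for the inbox folder or ActiveMQ queue. This is an assumption made so the example runs without Camel or a broker. `AsyncForwarder` is a hypothetical name. `onEvent` only enqueues and returns. A single background worker forwards the messages and re-queues failed ones after a short back-off.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Predicate;

// Sketch: the event thread only enqueues; one background worker forwards.
final class AsyncForwarder implements AutoCloseable {
    // In-memory stand-in for the inbox folder or ActiveMQ queue (not durable).
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final ExecutorService worker = Executors.newSingleThreadExecutor();

    AsyncForwarder(Predicate<String> sender, long retryDelayMillis) {
        worker.execute(() -> {
            try {
                while (true) {
                    String message = queue.take(); // waits for the next event
                    if (!sender.test(message)) {
                        // Keep the failed message (at the tail, so ordering is not preserved)
                        // and back off before the next attempt.
                        queue.put(message);
                        Thread.sleep(retryDelayMillis);
                    }
                }
            } catch (InterruptedException e) {
                // close() interrupts the worker; restore the flag and exit.
                Thread.currentThread().interrupt();
            }
        });
    }

    // Called on the event-delivery thread: returns without waiting for the external system.
    void onEvent(String eventJson) {
        queue.add(eventJson);
    }

    @Override
    public void close() {
        worker.shutdownNow();
    }
}
```

Unlike a file inbox or a persistent ActiveMQ queue, this in-memory queue is lost on restart. It therefore shows only the asynchronous hand-off, not durability.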

Build Demo Project

First, build and install the module with Maven:

$ mvn clean install

Then build the demo project in the 'demo' submodule with Maven and run the demo applications locally with the Maven Cargo Plugin (embedded Apache Tomcat):

$ cd demo

$ mvn clean verify

$ mvn -P cargo.run

Web Applications in Demo Project

When you run the demo project, the following web applications are available by default:

- CMS (/cms): brXM Content Authoring Web Application

- SITE (/site): brXM Content Delivery Web Application